What is DeepSeek?

It’s a Chinese startup that appeared in 2023 and jumped headfirst into the large language model scene with a whole family of DeepSeek models specializing in coding, math, and reasoning. Basically, it’s as if OpenAI’s GPT had decided to become a techie. The family includes several models:

- DeepSeek V2.5 is a large open-source language model that can be considered a competitor to GPT-4, LLaMA3-70B, and Mixtral 8x22B. At the time of writing, V2.5 is the most current version. It supports a context of up to 128,000 tokens.

- DeepSeek Coder V2 is the latest version of DeepSeek’s model tailored for writing code. It uses a Mixture of Experts (MoE) architecture: the model contains many specialized expert subnetworks, and a router sends each token to only a few of them, so only a fraction of the parameters is active at any step. Rather than being trained from scratch, it was built by continuing the pre-training of an intermediate DeepSeek-V2 checkpoint. In DeepSeek V2.5, Coder V2 was merged with the general chat model; before this version, the two models existed separately.

- DeepSeek Math is a model for solving mathematical problems. It is usually not used on its own; it was built by continuing the training of DeepSeek Coder v1.5 on math-related data.

- DeepSeek VL is a model that looks at an image and describes it in text. It can also take into account captions, diagrams, and other natural-language text in the image. On its own it is of limited use, but it is quite useful as a helper that tells another neural network what is in a picture the user has sent.
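To make the Mixture of Experts idea from the Coder V2 item more concrete, here is a minimal Python sketch of generic top-k routing for a single token. It is not DeepSeek’s actual implementation (DeepSeek’s own MoE design adds refinements such as shared experts); the names `moe_layer`, `gate_weights`, and the toy scaling experts are hypothetical and exist only for illustration.

```python
import math
from typing import Callable, List, Sequence, Tuple

Vector = List[float]
Expert = Callable[[Vector], Vector]


def softmax(xs: Sequence[float]) -> List[float]:
    """Numerically stable softmax."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def moe_layer(
    x: Vector,
    gate_weights: Sequence[Vector],  # hypothetical learned router row, one per expert
    experts: Sequence[Expert],
    k: int = 2,
) -> Tuple[Vector, List[int]]:
    """Route one token vector to its top-k experts and mix their outputs."""
    # Router: one score per expert, here a dot product with that expert's gate row
    logits = [sum(w * xi for w, xi in zip(row, x)) for row in gate_weights]
    # Keep only the k highest-scoring experts (ties keep their original order)
    top = sorted(range(len(experts)), key=lambda i: logits[i], reverse=True)[:k]
    # Turn the selected scores into mixing weights that sum to 1
    weights = softmax([logits[i] for i in top])
    out = [0.0] * len(x)
    for weight, i in zip(weights, top):
        y = experts[i](x)  # only the selected experts are evaluated
        out = [o + weight * yi for o, yi in zip(out, y)]
    return out, top


def make_scaler(factor: float) -> Expert:
    """Toy expert: multiplies the vector by a constant."""
    return lambda v: [factor * vi for vi in v]


if __name__ == "__main__":
    experts = [make_scaler(f) for f in (1.0, 2.0, 3.0, 4.0)]
    gate = [[0.0, 0.0], [0.0, 0.0], [5.0, 0.0], [0.0, 2.5]]
    out, chosen = moe_layer([1.0, 2.0], gate, experts, k=2)
    print(chosen, out)
```

Running it prints `[2, 3] [3.5, 7.0]`: experts 2 and 3 tie on score, each gets weight 0.5, and experts 0 and 1 are never called. Because only k experts run per token, an MoE model can have a large total parameter count while spending far less compute on each token.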

When it comes to writing code, the first two models are the ones to pay attention to.

Where to try DeepSeek

There are several ways to run the neural network.

Official website

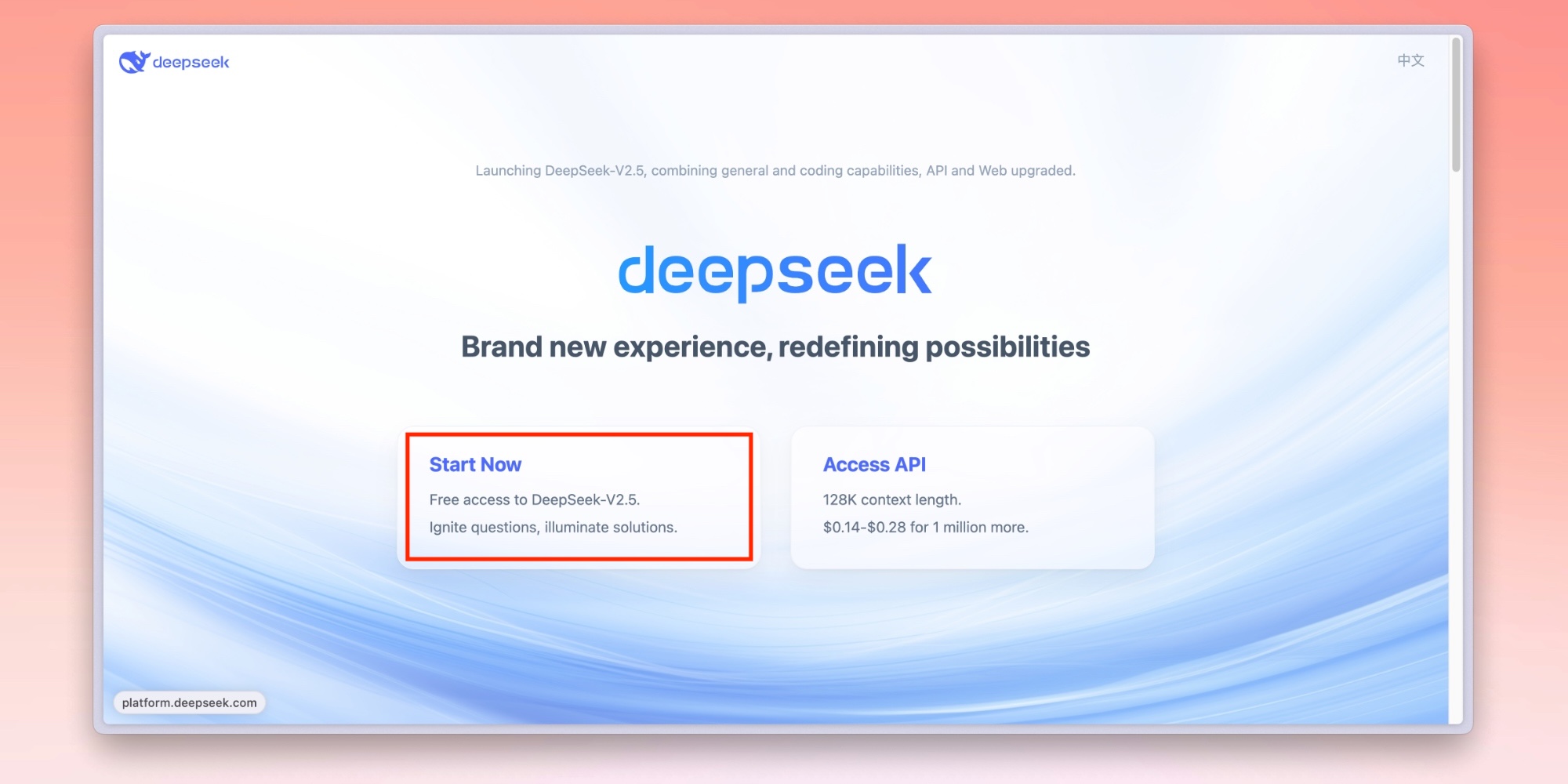

The easiest option is the chatbot on the official website. Getting access is simple: go to the DeepSeek page, click Start Now, and log in with an email address or a Google account.

The chatbot window opens right after that. Everything runs on DeepSeek V2.5, split into two modes: a general-purpose assistant and a coding assistant. In practice, the split between the two chats is fairly arbitrary, since both can edit code and suggest dinner recipes.

The split is more a way to keep topics apart so that unrelated information does not clutter the context. The chats can be used in parallel, for example, to discuss everyday matters with DeepSeek and work tasks with Coder.

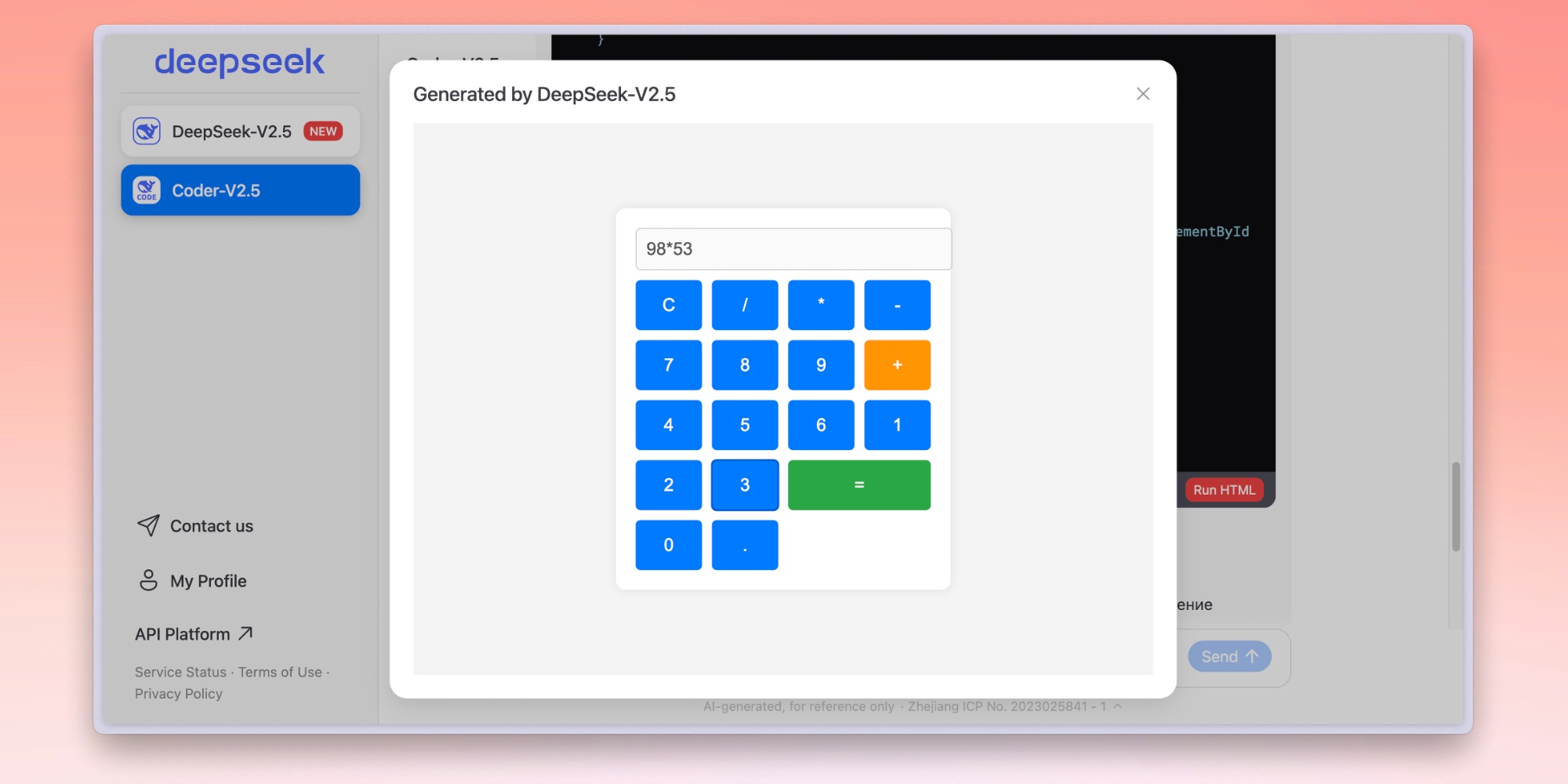

The only functional difference between the Coder chat and the regular DeepSeek is the ability to run HTML code directly from the bot: a pop-up window opens in which you can check what the neural network has produced.

By default, the bot replies in English, but if you write a request in another language, it switches right away. This works with Russian too.

You can write queries to DeepSeek following all the canons of ChatGPT prompting: break complex questions into a chain of simpler sequential ones, and provide examples and context. Speaking of context: the bot keeps 4,096 tokens in memory (a token is roughly 3-5 characters), so you can feed it a solid chunk of code before asking for recommendations.
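The 3-5 characters-per-token figure is only a rule of thumb, but it is enough for a quick check before pasting code into the chat. The Python sketch below estimates a token range from the character count and conservatively tests it against the 4,096-token window. The function names are hypothetical, and a real tokenizer will give different counts, especially for symbol-heavy code.

```python
import math
from typing import Tuple


def token_range(text: str, min_chars: float = 3.0, max_chars: float = 5.0) -> Tuple[int, int]:
    """Rough (low, high) token estimate from the 3-5 characters-per-token rule of thumb."""
    n = len(text)
    # More characters per token means fewer tokens, so max_chars gives the low bound
    return math.ceil(n / max_chars), math.ceil(n / min_chars)


def fits_in_context(text: str, context_tokens: int = 4096, reserve: int = 0) -> bool:
    """Conservative check using the high estimate; `reserve` leaves room, e.g. for your question."""
    _, high = token_range(text)
    return high + reserve <= context_tokens


if __name__ == "__main__":
    snippet = "def add(a, b):\n    return a + b\n" * 300
    print(len(snippet), token_range(snippet), fits_in_context(snippet))
```

Using the pessimistic 3 characters per token means the check errs on the side of saying a file does not fit, which is the safer mistake when you want the whole snippet to stay in context.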

The bot will help you complete your code, find and fix errors, and improve and simplify what you’ve already written. DeepSeek Coder V2 supports 338 programming languages, so you can write in almost anything.

Other options

If for some reason the site is not suitable, there are several other options for accessing DeepSeek Coder.

- Installation on a computer. Files and instructions are on the developers’ GitHub: both the full Coder V2 model and a lightweight Lite version are offered. Each comes in two variants, Base and Instruct (the latter tuned for chat), and the two differ in parameter count: 16B for Lite and 236B for the full model (B stands for billions). As expected, the larger model is resource-hungry: the system requirements call for 8 × 80 GB GPUs, so only Lite is realistic for personal use.

- Running on a remote machine via Hugging Face. Two versions of DeepSeek Coder are available there, 7B and 33B. The number is the parameter count in billions; both belong to the first generation of the model. You can also try DeepSeek VL this way. To start, open the DeepSeek page on Hugging Face and select the model you want in the Spaces section. Everything runs noticeably slower than with a local installation or the chatbot on the website.

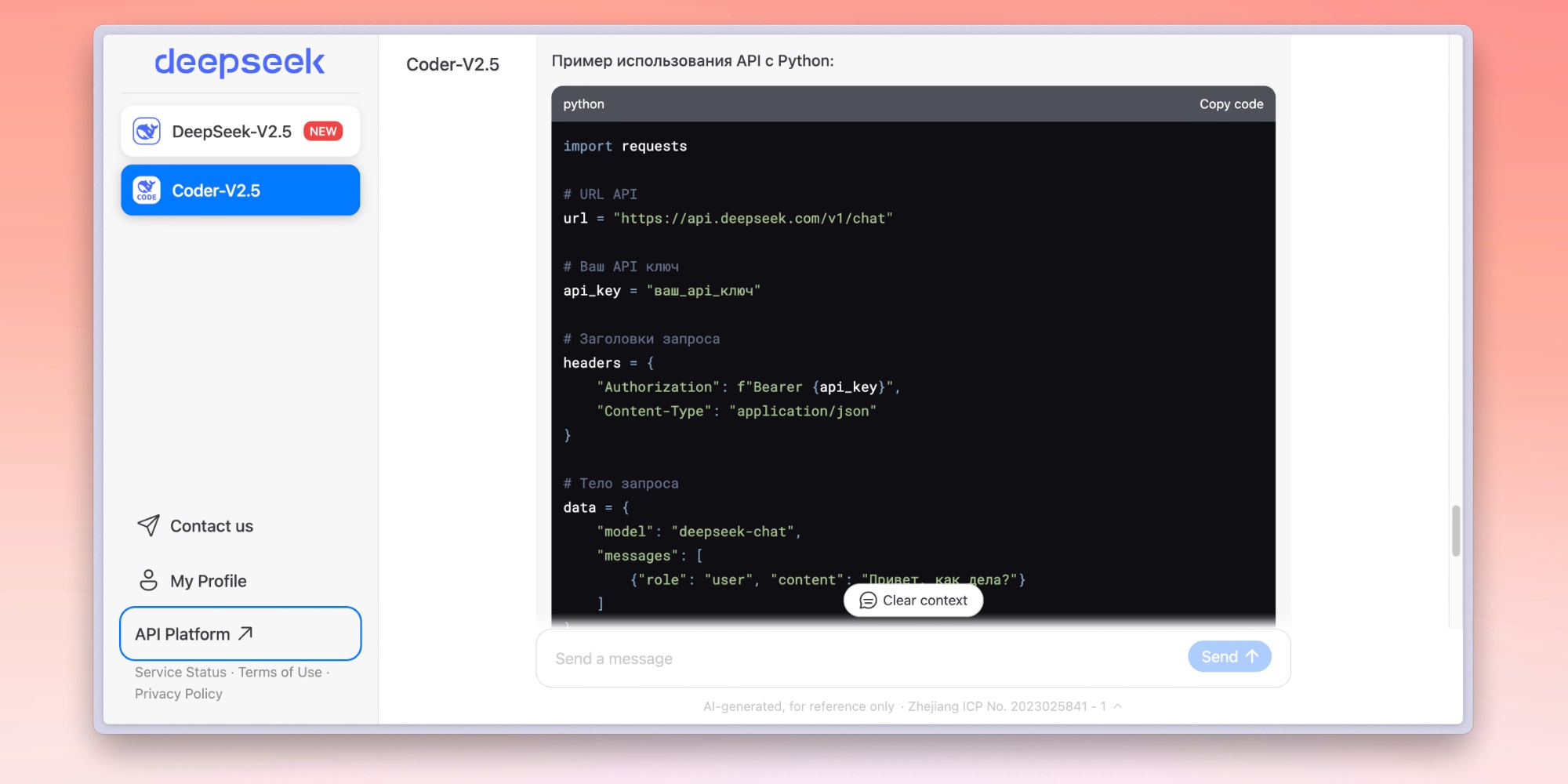

- Use via the API. In this case, requests are processed on DeepSeek’s servers, and the context grows to 128K tokens. To build your own chatbot or integrate the model into a project, you need to get an API key on the DeepSeek Platform and install the necessary libraries. Integration with popular tools and extensions is supported, including VS Code.
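For the local-installation option, a back-of-the-envelope calculation shows why the full model needs a multi-GPU server. The Python sketch below multiplies parameter count by bytes per parameter (2 for BF16, about 0.5 for 4-bit quantization) and adds a 20% overhead for the KV cache and activations. That overhead factor is an assumption, not a DeepSeek figure, and real requirements depend on context length and the inference engine. The 236B value is DeepSeek-Coder-V2’s published total parameter count; note that an MoE model must keep all of its weights in memory even though only a fraction is active per token.

```python
import math


def weight_memory_gb(params_billions: float, bytes_per_param: float) -> float:
    """Memory for the weights alone, in GB (10^9 bytes)."""
    # 1e9 parameters * bytes_per_param bytes = bytes_per_param GB per billion parameters
    return params_billions * bytes_per_param


def gpus_needed(
    params_billions: float,
    bytes_per_param: float,
    gpu_memory_gb: float,
    overhead: float = 1.2,  # assumption: +20% for KV cache and activations
) -> int:
    """Minimum number of GPUs of a given size whose combined memory fits the model."""
    total = weight_memory_gb(params_billions, bytes_per_param) * overhead
    return math.ceil(total / gpu_memory_gb)


if __name__ == "__main__":
    print("Coder V2, BF16, 80 GB cards:", gpus_needed(236, 2.0, 80))
    print("Lite, BF16, 24 GB cards:", gpus_needed(16, 2.0, 24))
    print("Lite, 4-bit, 24 GB cards:", gpus_needed(16, 0.5, 24))
```

Under these assumptions the full model lands at 8 cards of 80 GB, in line with the stated requirement, while a 4-bit Lite model fits on a single 24 GB consumer GPU by this estimate.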
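For the API route, DeepSeek’s documentation describes an OpenAI-compatible chat-completions endpoint. The Python sketch below sends one request using only the standard library. The URL `https://api.deepseek.com/chat/completions`, the model name `deepseek-chat`, and the response shape follow DeepSeek’s public docs as we understand them and may change, so check the current documentation; the environment variable name `DEEPSEEK_API_KEY` and the helper names are hypothetical.

```python
import json
import os
import urllib.request

# Assumption: endpoint and model name as listed in DeepSeek's API docs at the time of writing
API_URL = "https://api.deepseek.com/chat/completions"
DEFAULT_MODEL = "deepseek-chat"


def build_request(api_key: str, prompt: str, model: str = DEFAULT_MODEL) -> urllib.request.Request:
    """Build (but do not send) a chat-completion POST request."""
    body = {
        "model": model,
        "messages": [
            {"role": "system", "content": "You are a helpful coding assistant."},
            {"role": "user", "content": prompt},
        ],
        "stream": False,
    }
    return urllib.request.Request(
        API_URL,
        data=json.dumps(body).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            "Authorization": f"Bearer {api_key}",
        },
        method="POST",
    )


def ask(api_key: str, prompt: str) -> str:
    """Send the request and return the assistant's reply text."""
    with urllib.request.urlopen(build_request(api_key, prompt), timeout=60) as resp:
        payload = json.load(resp)
    # OpenAI-style response: the reply text is in choices[0].message.content
    return payload["choices"][0]["message"]["content"]


if __name__ == "__main__":
    # DEEPSEEK_API_KEY is a hypothetical variable name; store your key however you prefer
    key = os.environ.get("DEEPSEEK_API_KEY")
    if key:
        print(ask(key, "Write a Python function that reverses a string."))
    else:
        print("Set DEEPSEEK_API_KEY to send a real request.")
```

Because the endpoint follows the OpenAI format, the official `openai` Python SDK can also be pointed at it by setting `base_url`; the plain `urllib` version simply avoids extra dependencies.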

How much does it cost?

The DeepSeek chatbot is completely free and has no usage restrictions, and running DeepSeek Coder V2 locally is free as well. Moreover, this applies to both research and commercial use.

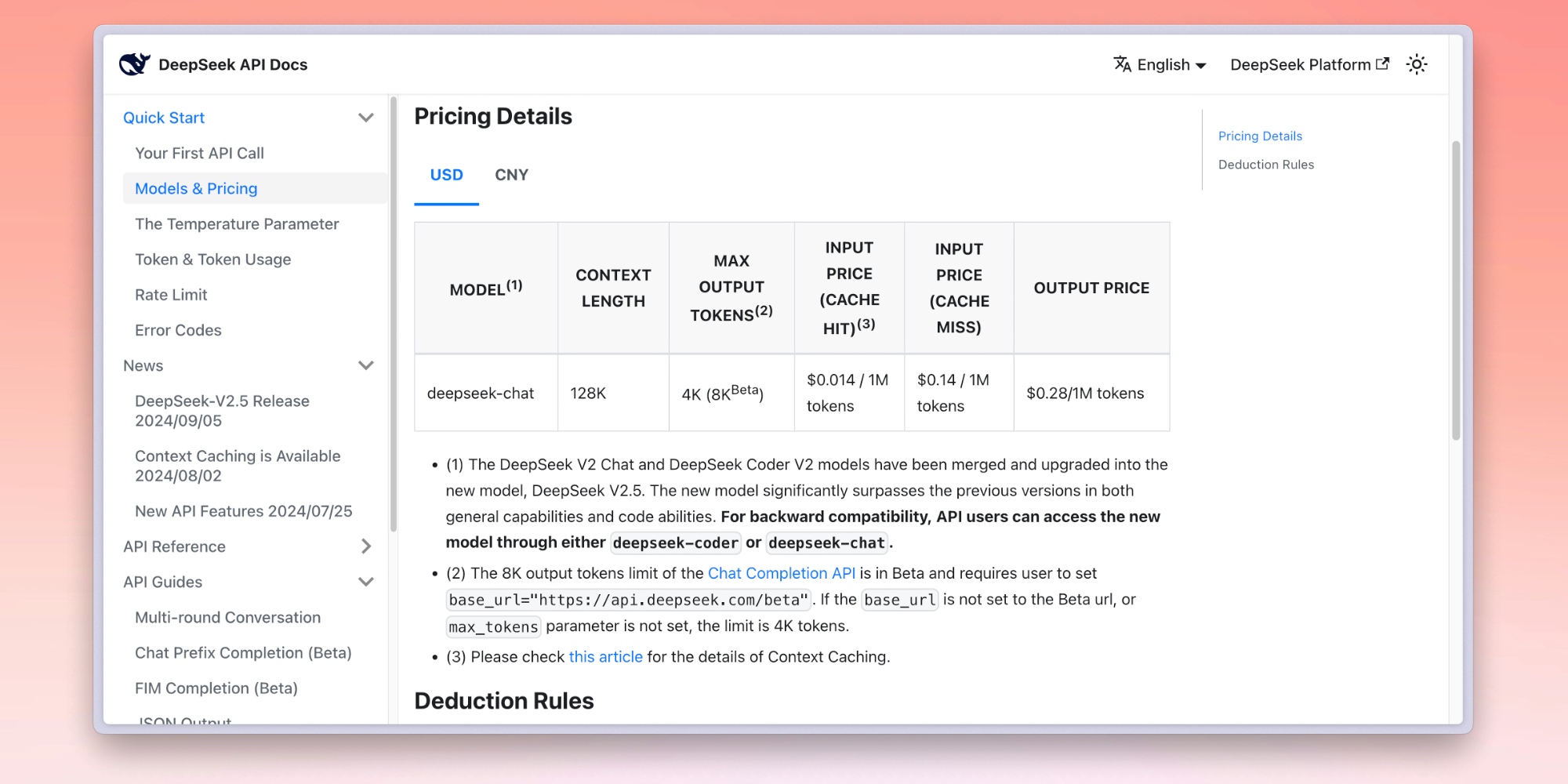

The creators charge only for API usage: 1.4 to 14 cents per million input tokens (the lower price applies when the input hits DeepSeek’s context cache) and 28 cents per million output tokens. A token is a word or part of a word: the model splits the request into tokens for processing and generates its response token by token.
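To see what these prices mean in practice, here is a small Python cost estimator. It assumes, as DeepSeek’s pricing page described it at the time of writing, that the 1.4-cent input price applies to tokens served from the context cache and the 14-cent price to all other input; check current pricing before relying on the numbers. The function name and parameters are hypothetical.

```python
# Prices in US cents per million tokens, as quoted above (subject to change)
PRICE_INPUT_CACHE_HIT = 1.4
PRICE_INPUT_CACHE_MISS = 14.0
PRICE_OUTPUT = 28.0


def api_cost_cents(input_tokens: int, output_tokens: int, cached_input_tokens: int = 0) -> float:
    """Estimate the cost of API usage in US cents."""
    if not 0 <= cached_input_tokens <= input_tokens:
        raise ValueError("cached_input_tokens must be between 0 and input_tokens")
    uncached = input_tokens - cached_input_tokens
    return (
        cached_input_tokens * PRICE_INPUT_CACHE_HIT
        + uncached * PRICE_INPUT_CACHE_MISS
        + output_tokens * PRICE_OUTPUT
    ) / 1_000_000


if __name__ == "__main__":
    # 2M input tokens (500K of them cached) and 300K output tokens
    print(f"{api_cost_cents(2_000_000, 300_000, 500_000):.1f} cents")
```

For the example workload this prints `30.1 cents`; most of that comes from uncached input, which is why reusing the same long prefix across requests can noticeably lower the bill.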

To work with the API, you need to top up your balance in advance. You can do this via a bank card or PayPal, but you cannot pay with Russian cards.
